- Introduction

- History of data centers

- Types of data centers

- Design of a data center

- Cooling

- Availability

- Energy usage

- Water Usage

- Heat reuse

- Key Performance Indicators

- Connectivity

Introduction

Before I start talking about data centers, I want to talk briefly about the internet. For many people, the internet is something they take for granted. But what is the internet anyway? The internet consists of many different networks that are connected to each other. You connect to it with your device, which allows you to use an app, browse a website, stream a video or send an email, for example.

All these different networks come together in data centers, and while you are surfing the internet, you are connected to the servers that are needed for all the apps, videos, chats, emails, etc.

Data centers can be seen as the building blocks of the internet, with a lot of different connections.

History of data centers

In the early days of the internet (the successor to ARPANET), there were no data centers yet, but the first internet nodes appeared. In these internet nodes, many connections and networks came together, and there were many modems through which you could dial in via the telephone line. At that time, the internet was used to obtain information, and that information was not on servers in data centers but often still on a computer somewhere under a desk, connected via a modem.

The growth of the internet and the demand for continuous availability of servers led to small data centers called computer rooms. These forerunners of today's data centers often had a separate cooling system and were often equipped with a UPS system.

The design of these computer rooms often did not yet take the redundancy of the installations into account. More on this later.

Two nice examples of old data centers that were, and still are, important for connectivity are NIKHEF in Amsterdam and the former Palo Alto Internet Exchange, now known as the Equinix SV8 data center in Palo Alto.

Types of data centers

Before the internet, many businesses were already using computers. At that time, not everyone had their own computer as we know it today; instead, there was often a mainframe in a computer room, and staff used terminals. These terminals were essentially simple devices that connected to the mainframe.

More and more companies have said goodbye to their own computer rooms for various reasons. The rise of the personal computer, with Windows 95 as a milestone, was a game changer that made mainframes and terminals largely obsolete for many businesses. Due to the huge demand for more server capacity, server rooms not only had to become bigger but also needed more energy for the servers, which in turn required more UPS and cooling capacity. With the arrival of data centers, it became more attractive for many companies to move their IT environment from on-premises to a data center. Data centers not only had a fast internet connection; availability could also be better guaranteed because the installations had a higher degree of redundancy. In addition, moving IT to a data center was ultimately cheaper for many companies.

The first internet nodes, also called Points of Presence (PoPs), were small spaces whose main purpose was to connect networks.

Only a few of these old data centers are still operating, mainly because of the connectivity to and inside them.

Today, we mainly distinguish the following types of data centers:

Single-tenant / enterprise data centers

These data centers or computer rooms are owned and operated by one company and house that company's IT equipment on-premises.

Colocation data centers

Colocation data centers are the most common. In a colocation data center, anyone can rent one or more cabinets and install IT equipment.

Within colocation data centers, there is a category that differs slightly. These are the colocation data centers (PoPs) that were, and still are, mainly used for interconnection. Not many servers are installed in these data centers, but there are very many routers and switches that provide connectivity within this and other data centers. These data centers often host Internet Exchanges, which are of great importance for worldwide connectivity. A nice and current overview of the internet exchanges can be found on the Internet Exchange Map.

Hyperscale data centers

In addition to colocation data centers, there are now hyperscale data centers. Hyperscale data centers are not only very large but also consume a lot of energy. They are built, managed and used by a single organization, for example Microsoft, Google and Facebook / Meta.

Main differences between the colocation and hyperscale data centers

Colocation and hyperscale data centers cannot simply be compared with each other, for multiple reasons.

The biggest difference between colocation and hyperscale data centers comes from the type of customer. In a hyperscale data center, the organization itself is the user and can therefore align the data center infrastructure in advance with the IT equipment to be installed, which is not possible in a colocation data center.

The different type of customer affects the following:

- Design

- Utilization / capacity

- Operating temperatures

- Centralized to de-centralized batteries

Design

When designing a data center, the degree of redundancy is determined in advance. In a colocation data center, concurrent maintainability is in most cases the minimum design requirement. This means that each component can be maintained or replaced without any impact on the availability of the IT equipment; the redundancy therefore sits within the data center. Hyperscale data centers, in contrast, are often designed with a lower degree of redundancy. If there is a problem with the data center infrastructure in a hyperscale data center, the software workloads running on the IT equipment can be switched to another data center. The difference therefore lies in the location of the redundancy: inside or outside the data center boundary.

Customers of colocation data centers expect a high degree of redundancy, which is why they choose to install their IT equipment in a colocation data center.

Data centers were originally designed, built and managed for availability, and nowadays we must find the balance between availability and sustainability. There is nothing wrong with that, but we know that when more components are needed to ensure higher availability, the data center becomes less efficient. More redundancy means more data center infrastructure components, which consume more energy and therefore lower the degree of sustainability.

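You could perhaps argue that, because a hyperscale organization places its redundancy in a second data center, the PUEs of both hyperscale data centers should be considered together when comparing them with a single colocation data center.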

Utilization / Capacity

A customer in a colocation data center contracts an amount of electrical capacity that it may use. In most situations, actual usage is lower than contractually specified.

Maximum energy efficiency is achieved when the data center infrastructure, such as the cooling and UPS systems, is fully loaded.

In a colocation data center, utilization is always lower than desired, because the sold capacity must be reserved so that customers can use their contracted capacity later.

In a hyperscale organization, the data center infrastructure can be fully loaded, resulting in maximum energy efficiency.
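To illustrate why partial load hurts efficiency, here is a minimal sketch that assumes a simplified overhead model: the infrastructure draws a fixed amount of power regardless of load, plus an amount proportional to the IT load. The function `pue_at_load` and all numbers (a hypothetical 1,000 kW design capacity, 150 kW fixed overhead and 15% proportional overhead) are illustrative assumptions, not measured data.

```python
def pue_at_load(it_kw: float, fixed_overhead_kw: float, proportional_overhead: float) -> float:
    """Return PUE (total facility power / IT power) under a simple two-part overhead model.

    Assumption: overhead = a fixed part (always on) + a part proportional to the IT load.
    """
    if it_kw <= 0:
        raise ValueError("IT load must be positive")
    overhead_kw = fixed_overhead_kw + proportional_overhead * it_kw
    return (it_kw + overhead_kw) / it_kw


DESIGN_IT_KW = 1000.0         # hypothetical design IT capacity
FIXED_OVERHEAD_KW = 150.0     # hypothetical load-independent overhead (e.g. lighting, controls)
PROPORTIONAL_OVERHEAD = 0.15  # hypothetical overhead per kW of IT load

for utilization in (0.25, 0.50, 0.75, 1.00):
    it_kw = DESIGN_IT_KW * utilization
    pue = pue_at_load(it_kw, FIXED_OVERHEAD_KW, PROPORTIONAL_OVERHEAD)
    print(f"{utilization:>4.0%} of design load -> PUE {pue:.2f}")
```

Under these assumptions, the PUE falls from 1.75 at 25% load to 1.30 at full load, because the fixed part of the overhead is spread over more IT energy.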

Operating temperatures

Hyperscale organizations can operate their data centers at higher temperatures, which makes them more energy efficient. Colocation data centers struggle to raise these temperatures. It could also be that hyperscale organizations operate their IT equipment at a high temperature in their own hyperscale data centers, while requesting lower temperatures for their IT equipment in colocation data centers. Because of connectivity, for example, these hyperscale organizations also need a presence in colocation data centers.

Centralized to de-centralized batteries

In hyperscale data centers, we often see that the battery used in case of a power failure is part of the IT equipment. When this is applied, the losses of these batteries are no longer counted as data center infrastructure in the PUE (Power Usage Effectiveness) calculation, but as IT energy. The PUE calculation of such a hyperscale data center therefore covers less of the infrastructure than that of a colocation data center, resulting in an apparently better PUE.
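The effect on the PUE can be shown with a small calculation. This sketch assumes, hypothetically, that the battery losses are the same 50 kWh in both designs and that IT energy is metered at the input of the IT equipment; only the measurement boundary changes.

```python
def pue(total_facility_kwh: float, it_kwh: float) -> float:
    """PUE = total data center energy / energy measured as IT energy."""
    return total_facility_kwh / it_kwh


SERVER_KWH = 1000.0         # hypothetical energy used by the servers themselves
BATTERY_LOSS_KWH = 50.0     # hypothetical battery losses, assumed equal in both designs
OTHER_OVERHEAD_KWH = 250.0  # hypothetical cooling and other infrastructure energy

total_kwh = SERVER_KWH + BATTERY_LOSS_KWH + OTHER_OVERHEAD_KWH

# Centralized UPS: the battery losses sit outside the IT boundary and count as overhead.
pue_centralized = pue(total_kwh, SERVER_KWH)

# Battery inside the IT equipment: the losses occur behind the IT meter,
# so they are counted as IT energy instead of overhead.
pue_decentralized = pue(total_kwh, SERVER_KWH + BATTERY_LOSS_KWH)

print(f"Total energy in both cases: {total_kwh:.0f} kWh")
print(f"Centralized batteries:   PUE {pue_centralized:.3f}")
print(f"Decentralized batteries: PUE {pue_decentralized:.3f}")
```

The total energy is identical (1,300 kWh), yet the PUE drops from 1.300 to about 1.238 simply because the losses moved inside the IT boundary.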

Design of a data center

The first commercial data centers appeared around 1998 to 2000, and much has changed since then. These first data centers were not purpose-built; in many situations, a data room was created in an existing building.

Nowadays, data center designs are tailored to their function and size. The electricity connection, cooling, integration into the built environment and security are important points of attention in the design and construction of a data center.

Data centers are designed, built and commissioned in a relatively short period of time. However, this often happens in several phases, with more capacity being built and installed as demand increases. Contrary to what people in various countries think, data centers are often not just big, ugly grey buildings. Of course, those exist, but in general attention is paid to making the building look good. The building must comply with legal regulations, but in many situations data center organizations go even further to make it look good.

From a sustainability point of view, it is very unfortunate that many data centers are built in the wrong places: places where their heat, often generated with sustainable energy, cannot easily be reused by another company, organization or homes. This is not surprising, as heat reuse has never been high on the agenda of either the data center industry or other stakeholders. For heat reuse, it would therefore be very good if data centers had one or more heat users at a relatively short distance.

Cooling

Datacenters Explained does not discuss the different cooling methods. Instead, I would like to refer you to the following video from The Engineering Mindset.

Availability

Data centers were originally designed, built and operated for availability. These days, we need to find the balance between availability and sustainability. Yes, we can do both.

In data centers, it’s all about redundancy, and the topology expressed in terms of N is key. N represents the “Need”: the capacity required for operation.

The Tier levels are commonly associated with the following availability figures:

- Tier 1: 99.671%, or roughly 28.8 hours of downtime per year

- Tier 2: 99.741%, or roughly 22.7 hours of downtime per year

- Tier 3: 99.982%, or roughly 1.6 hours of downtime per year

- Tier 4: 99.995%, or roughly 26.3 minutes of downtime per year
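The downtime figures follow directly from the availability percentages. A minimal sketch, assuming a 365-day year (8,760 hours) and ignoring leap years:

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; leap years ignored


def downtime_hours_per_year(availability_percent: float) -> float:
    """Maximum downtime per year implied by an availability percentage."""
    return (1.0 - availability_percent / 100.0) * HOURS_PER_YEAR


TIER_AVAILABILITY = {"Tier 1": 99.671, "Tier 2": 99.741, "Tier 3": 99.982, "Tier 4": 99.995}

for tier, availability in TIER_AVAILABILITY.items():
    hours = downtime_hours_per_year(availability)
    print(f"{tier}: {availability}% -> {hours:.2f} hours ({hours * 60:.0f} minutes) per year")
```

For example, 99.995% leaves 0.005% of 8,760 hours, which is about 0.44 hours or 26.3 minutes; Tier 2 works out to roughly 22.7 hours.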

The topology is well described in the following article from the Uptime Institute.
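To make the N notation concrete, the sketch below counts units for the common N, N+1 and 2N topologies and shows how the load per unit drops as redundancy increases, which is one reason more redundancy costs efficiency. The 1,200 kW load and 500 kW unit size are hypothetical, and the sketch assumes the load is shared equally over all installed units.

```python
import math


def units_required(load_kw: float, unit_capacity_kw: float, topology: str) -> int:
    """Number of units (e.g. UPS modules or chillers) for a given redundancy topology."""
    n = math.ceil(load_kw / unit_capacity_kw)  # N: units needed to carry the load
    if topology == "N":
        return n
    if topology == "N+1":
        return n + 1  # one spare unit
    if topology == "2N":
        return 2 * n  # a complete second system
    raise ValueError(f"unknown topology: {topology}")


LOAD_KW = 1200.0  # hypothetical load
UNIT_KW = 500.0   # hypothetical capacity per unit

for topology in ("N", "N+1", "2N"):
    units = units_required(LOAD_KW, UNIT_KW, topology)
    load_fraction = LOAD_KW / (units * UNIT_KW)  # assumes equal load sharing
    print(f"{topology:>3}: {units} units, each loaded to {load_fraction:.0%}")
```

With these numbers, N needs 3 units at 80% load, N+1 needs 4 units at 60% and 2N needs 6 units at 40%.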

Energy usage

A lot of energy is consumed in data centers, but what exactly consumes that energy? The media often talk about the enormous energy consumption of data centers themselves, and in recent years there has been a trend of banning data centers for various reasons: besides energy consumption, also water consumption and the fact that they are such ugly boxes.

Are the data centers responsible for this energy and water consumption, or are the end users, i.e. society?

For many years already, most of the energy in a data center has been used not by the data center infrastructure but by the IT equipment.

Energy Efficiency


Before 2007, the average Power Usage Effectiveness (PUE) was 2.5 or even higher, but what does a PUE of 2.5 mean?

With a PUE of 2.5, the total energy consumption of a data center is 2.5 energy units, of which 1.0 unit is used by the IT equipment. So 1.5 additional units are needed that are not used directly by the IT equipment but by the data center infrastructure, to ensure that the IT equipment can use its 1.0 unit.

According to the Uptime Institute, the average PUE worldwide was 1.56 in 2024. This means that 0.56 units of energy are still needed for every 1.0 unit of energy used by the IT equipment.

The 1.56 is a worldwide average; many data centers already achieve an average PUE between 1.1 and 1.25.
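PUE is the ratio of the total data center energy to the IT energy, usually measured over a year. A minimal sketch with hypothetical annual meter readings chosen to match the 2024 average of 1.56:

```python
def pue(total_facility_kwh: float, it_kwh: float) -> float:
    """Power Usage Effectiveness: total data center energy divided by IT energy."""
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return total_facility_kwh / it_kwh


def overhead_per_it_kwh(pue_value: float) -> float:
    """Infrastructure energy needed for every 1.0 kWh used by the IT equipment."""
    return pue_value - 1.0


annual_it_kwh = 10_000_000.0     # hypothetical IT energy per year
annual_total_kwh = 15_600_000.0  # hypothetical total data center energy per year

value = pue(annual_total_kwh, annual_it_kwh)
print(f"PUE {value:.2f}: {overhead_per_it_kwh(value):.2f} kWh of overhead per kWh of IT energy")
print(f"PUE 2.5: {overhead_per_it_kwh(2.5):.2f} kWh of overhead per kWh of IT energy")
```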

Years ago, the data center industry made huge improvements in energy efficiency.

The fact is that less progress has been made in recent years. There is always room for improvement, but the question is to what extent a data center can continue to optimize, taking into account redundancy, costs and customer requirements.

The question is, should the data center organization continue to optimize or is it now the turn of the IT industry?

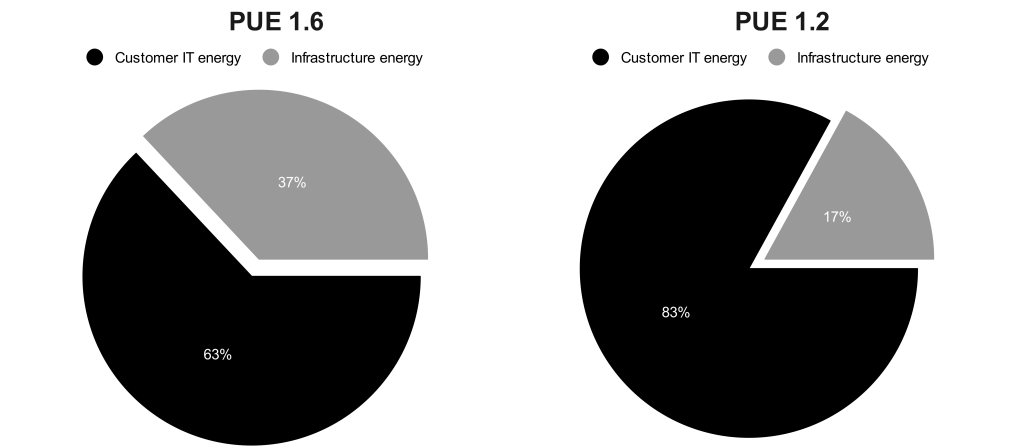

The pie charts show the impact of a high PUE of 1.6 and a low PUE of 1.2.

On average, with a PUE of 1.6, only 63% of the total energy consumption in a data center is used by IT, compared to 83% with a PUE of 1.2.
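The percentages in the pie charts follow from the inverse of the PUE: the IT share of the total energy is 1 / PUE, and the rest goes to the data center infrastructure. A minimal sketch, using a hypothetical total consumption of 1,000 MWh:

```python
def energy_split(total_mwh: float, pue_value: float) -> tuple[float, float]:
    """Split total data center energy into (IT energy, infrastructure energy)."""
    it_mwh = total_mwh / pue_value  # IT share = 1 / PUE
    return it_mwh, total_mwh - it_mwh


TOTAL_MWH = 1000.0  # hypothetical total annual consumption

for pue_value in (1.6, 1.2):
    it_mwh, infra_mwh = energy_split(TOTAL_MWH, pue_value)
    print(f"PUE {pue_value}: IT {it_mwh:.1f} MWh ({it_mwh / TOTAL_MWH:.1%}), "
          f"infrastructure {infra_mwh:.1f} MWh")
```

For a PUE of 1.6 this gives 62.5% for IT (the 63% in the text, rounded), and for a PUE of 1.2 about 83.3%.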

Water Usage

A few years ago, people were surprised by the water consumption of data centers, and it is still not clear to many people why data centers consume water at all.

Evaporating water is the most energy-efficient process to cool a data center.

For many years, evaporating water, for example in a cooling tower, was a logical step: after all, there was more than enough water of good quality available at a low price. This is no longer the case, which is why data centers have been in the news a lot because of their water consumption.

Whether a data center still needs to evaporate water for cooling should depend on local conditions, rather than on a strategic decision based on technology or personal preference.

We will have to look more closely at the balance between energy and water use in data centers. I have written an article about this before.

Types of evaporative cooling

The different types of evaporative cooling can be roughly divided into the following categories:

- Year-round evaporative only (1.5 – 2.0 l/kWh)

- Hybrid cooling (0.5 – 1.0 l/kWh)

- Adiabatic assist (0.2 – 0.5 l/kWh)

Note: these are indicative values; actual consumption may differ.
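To get a feel for what these ranges mean, the sketch below converts them into an annual volume. It assumes, as is usual for WUE-style figures, that the litres per kWh refer to the IT energy, and it uses a hypothetical constant IT load of 1 MW; both are assumptions, and the results are only as indicative as the ranges themselves.

```python
LITRES_PER_M3 = 1000.0
HOURS_PER_YEAR = 8760

# Indicative ranges from the list above, in litres per kWh (assumed: per kWh of IT energy).
WATER_L_PER_KWH = {
    "Year-round evaporative only": (1.5, 2.0),
    "Hybrid cooling": (0.5, 1.0),
    "Adiabatic assist": (0.2, 0.5),
}


def annual_water_m3(litres_per_kwh: float, annual_it_kwh: float) -> float:
    """Annual water consumption in cubic metres."""
    return litres_per_kwh * annual_it_kwh / LITRES_PER_M3


IT_LOAD_KW = 1000.0  # hypothetical constant IT load
annual_it_kwh = IT_LOAD_KW * HOURS_PER_YEAR

for category, (low, high) in WATER_L_PER_KWH.items():
    print(f"{category}: {annual_water_m3(low, annual_it_kwh):,.0f} to "
          f"{annual_water_m3(high, annual_it_kwh):,.0f} m3 per year")
```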

As you can see above, water consumption varies per category. It is essential to understand the different evaporative cooling techniques before we can talk about the efficiency of water consumption in the data center.

In short, the goal is to evaporate as much of the total water intake as possible, because evaporation is the actual cooling process.

In addition to the water that evaporates, a quantity of water is also discharged. This water contains a higher concentration of minerals and, in most cases, a small amount of chemicals. The quality of the discharged water must comply with the applicable local regulations; this water can be considered minimally contaminated.

This discharged water is, in effect, used to clean and flush the evaporative cooling system.

If the system is not cleaned, this will have several negative effects:

- The system becomes contaminated and, after a while, provides less or no cooling capacity.

- Bacteria such as Legionella can grow in the water; these can not only make people sick but can even be fatal.

Correct maintenance and management of evaporative cooling systems is therefore of great importance.

The goal is to evaporate as much water as possible and to reuse the water used for cleaning the system as often as possible before it is discharged. This is expressed as the so-called Cycles of Concentration, which are managed by the water treatment installation.
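The relationship between evaporation, discharge and the cycles of concentration can be written as a simple water balance. The sketch below uses the common cooling tower approximation blowdown = evaporation / (cycles - 1), ignoring drift and leaks; the 10,000 m3 of evaporation is a hypothetical figure.

```python
def blowdown_m3(evaporation_m3: float, cycles: float) -> float:
    """Water discharged (blowdown) for a given evaporation and cycles of concentration."""
    if cycles <= 1:
        raise ValueError("cycles of concentration must be greater than 1")
    return evaporation_m3 / (cycles - 1)


def makeup_m3(evaporation_m3: float, cycles: float) -> float:
    """Total water intake (make-up) = evaporation + blowdown, ignoring drift."""
    return evaporation_m3 + blowdown_m3(evaporation_m3, cycles)


EVAPORATION_M3 = 10_000.0  # hypothetical annual evaporation

for cycles in (2, 3, 5, 8):
    intake = makeup_m3(EVAPORATION_M3, cycles)
    discharge = blowdown_m3(EVAPORATION_M3, cycles)
    print(f"COC {cycles}: intake {intake:,.0f} m3, discharge {discharge:,.0f} m3, "
          f"evaporated {EVAPORATION_M3 / intake:.0%}")
```

The evaporated share of the intake is (cycles - 1) / cycles: 50% at 2 cycles and 80% at 5 cycles. Higher cycles mean less discharge, but also a higher mineral concentration that the water treatment must keep under control.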

Considerations for evaporative cooling

When an organization wants to use evaporative cooling in its data center, it would do well to consider the following:

- What will be the water source?

  - Third-party utility water

  - Surface water

  - Groundwater

  - Rainwater

- What will be the water type?

  - Potable water

  - Non-potable water

- What is the local water stress according to the Aqueduct Water Risk Atlas 4.0 from the World Resources Institute?

- How many times can the water be reused within the data center boundary?

- Can the data center use recycled water, or can the data center become a supplier of recycled water to another process outside the data center boundary?

- Type of cooling: year-round, hybrid or adiabatic assist

Heat reuse

In recent years, data centers have become very good at removing heat efficiently, but getting rid of that heat is actually a waste of energy.

How great would it be if the heat from a data center were no longer rejected to the outside air, but could be reused outside the data center boundary?

Examples include a heat network for homes, heating a swimming pool or heating an industrial process.

Unfortunately, reusing heat is not so easy to achieve.

When the heat from a data center can be delivered to a process outside the data center boundary, the data center indirectly receives cooling in return. In other words, less heat needs to be cooled, which results in energy savings for the data center.

Many data centers are therefore open to supplying the heat, but why is it still not the success we would like to see?

First of all, there must be a customer in the area who would like to use the heat; unfortunately, this is not always the case.

When there is an end user of residual heat, the heat has to be transported to that user, and often many different stakeholders are involved. That makes reuse difficult in many situations: think of the distribution of costs and risks, lead times, temperature levels, etc.

Energy balance in data centers

Ultimately, it’s all about the energy balance in a data center.
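A rough energy balance shows why heat reuse saves energy for the data center. This sketch assumes that practically all electrical energy used by the IT equipment ends up as heat, and that the cooling installation removes heat with a hypothetical coefficient of performance (COP) of 5; heat delivered to a reuse customer no longer needs to be removed by the data center's own cooling. The function `cooling_energy_kwh` is an illustrative name, not an established formula.

```python
def cooling_energy_kwh(it_heat_kwh: float, reused_heat_kwh: float, cooling_cop: float) -> float:
    """Electrical energy the cooling needs to reject the heat that is not reused.

    Assumptions: all IT energy becomes heat, and cooling energy = rejected heat / COP.
    """
    if cooling_cop <= 0:
        raise ValueError("COP must be positive")
    rejected_kwh = max(it_heat_kwh - reused_heat_kwh, 0.0)
    return rejected_kwh / cooling_cop


IT_HEAT_KWH = 1_000_000.0  # hypothetical annual IT energy, released as heat
COP = 5.0                  # hypothetical cooling efficiency

for reused in (0.0, 400_000.0, 800_000.0):
    print(f"Reused {reused:,.0f} kWh -> cooling energy "
          f"{cooling_energy_kwh(IT_HEAT_KWH, reused, COP):,.0f} kWh")
```

In this simplified model, the cooling energy falls from 200,000 kWh to 120,000 kWh when 400,000 kWh of heat is reused. In practice, temperature levels and transport losses make the picture more complex.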

Key Performance Indicators

Working Group 1 of ISO/IEC JTC 1/SC 39 owns ISO/IEC 30134 (Information technology | Data centres | Key performance indicators) and develops new parts of it.

ISO/IEC 30134 provides a range of Key Performance Indicators (KPIs) for both the data center and the customer IT equipment.

Objectives of the ISO/IEC 30134:

- Minimization of the energy and other resource consumption

- Maximization of the effectiveness of the customer IT tasks within the data center

- Energy reuse in the form of waste heat

- Use of renewable energy

The KPIs of ISO/IEC 30134 are designed to be applicable to all types of data centers, and to be technology-neutral and geographically neutral.
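As an illustration of how several of these KPIs can be calculated from the same annual measurements, here is a minimal sketch. The formulas reflect our understanding of PUE (ISO/IEC 30134-2), the Renewable Energy Factor REF (30134-3), the Energy Reuse Factor ERF (30134-6) and WUE (30134-9); check the standards themselves for the exact measurement boundaries. The `AnnualReadings` class and all numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AnnualReadings:
    """Hypothetical annual meter readings for one data center."""
    total_dc_kwh: float   # total data center energy
    it_kwh: float         # energy used by the IT equipment
    renewable_kwh: float  # renewable energy used by the data center
    reused_kwh: float     # energy (heat) reused outside the data center boundary
    water_litres: float   # water used by the data center


def kpis(r: AnnualReadings) -> dict[str, float]:
    """Compute a few KPIs as ratios of the annual readings (assumed definitions)."""
    return {
        "PUE": r.total_dc_kwh / r.it_kwh,          # total energy / IT energy
        "REF": r.renewable_kwh / r.total_dc_kwh,   # renewable share of total energy
        "ERF": r.reused_kwh / r.total_dc_kwh,      # reused share of total energy
        "WUE (l/kWh)": r.water_litres / r.it_kwh,  # water per kWh of IT energy
    }


readings = AnnualReadings(
    total_dc_kwh=13_000_000.0,
    it_kwh=10_000_000.0,
    renewable_kwh=6_500_000.0,
    reused_kwh=1_300_000.0,
    water_litres=5_000_000.0,
)
for name, value in kpis(readings).items():
    print(f"{name}: {value:.2f}")
```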

ISO/IEC 30134 currently covers the following standards:

Note: Some parts have amendments that are not shown here

More about these Key Performance Indicators, and about standards and frameworks for data centers, can be found here.

Connectivity

Data centers are the building blocks of the internet, but without connectivity a data center is just a standalone building with IT equipment. Connectivity is therefore of great importance for a data center.

Submarine fiber optic cables

Data centers are connected to each other by means of fiber optic cables. In addition to the fiber optic cables buried in the ground on land, the fiber optic cables on the seabed are very important for connectivity. They are also currently in the spotlight because of possible sabotage that could damage or disable these cables.

TeleGeography has a nice and up-to-date map of the submarine fiber optic cables.

Tech Vision has published a nice video that explains and shows more about these submarine fiber optic cables.